Our Data Future

Our digital environment is changing, fast. Nobody knows exactly what it’ll look like in five to ten years’ time, but we know that how we produce and share our data will change where we end up. We have to decide how to protect, enhance, and preserve our rights in a world where technology is everywhere and data is generated by every action. Key battles will be fought over who can access our data and how they may use it. It’s time to take action and shape our future.

By Valentina Pavel, PI Mozilla-Ford Fellow, 2018-2019

Here’s where we start off

We are at a crossroads. We need to address challenges posed by technological choices made by governments and dominant companies, which are eroding privacy and centralising power. There are also promising opportunities. Fascinating technologies are being developed. Legal safeguards are still emerging: in Europe, for instance, people have strong privacy rights embedded in the European Convention on Human Rights, and in the EU, GDPR provides a new baseline standard for data protection. Courts across the world are responding to interesting challenges, from India's 2017 ruling on the right to privacy to Jamaica's ruling on the identification system and the U.S. ruling on cell phone location data. Countries around the world are also strengthening data protection laws – today more countries have some form of data protection than don’t.

But most importantly, we have the power to unite and transform. The Internet and the future built upon it could be a collective of action-driven critical thinkers. We can change the course of our path. The future is not a given: our actions and decisions will take us where we want to go.

In today’s digital environment, here’s what we’re not OK with:

Data monopolies and surveillance

First, we don't like data monopolies. Big tech companies harvest our data on a massive scale, and exploit it for their own interests. They are the new feudal lords. They aspire to surveil, predict and automate our lives and societies.

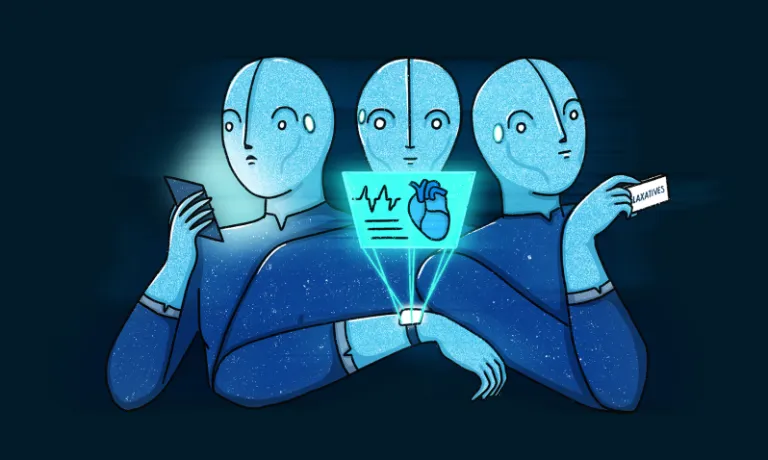

Surveillance machines like Alexa and Google Assistant have entered our homes. They listen carefully to what we say, and they watch closely what we do.

Mobile devices and wearables travel with us everywhere we go, extracting and sharing data about our every footstep. Publicly and privately, we are being watched.

This is not just passive surveillance; it's active control over our lives and social structures. Companies predict our behaviour and infer our interests, potentially knowing us better than we know ourselves. They offer an illusion of choice, when in fact they can decide which information reaches us and which doesn't. They use dark patterns to discourage us from exercising our rights.

Things happening behind our backs

It’s not just the data we knowingly generate – such as photos or posts on social media – that gets harvested. It’s also the data we indirectly generate: our location history, browsing activity, what devices we use and lots more information that has been derived, inferred or predicted from other sources. For example, from my browsing patterns, companies can predict my gender, income, number of children, shopping habits and interests, and gain insights about my social life. While in some countries data protection laws allow me to access all the data that online tracking companies collect, in most other places, I can’t. But things are not that simple. Even in the places where I can access data about me, I first need to know which company is collecting it. Unsurprisingly, I am often unaware of the pervasive tracking. If I do find out, asking a company for access to data is not a trivial task. It might be full of obstacles and difficulties – some intentional, some because of bad design.

Restrictions on freedom of expression

Internet platforms limit our freedoms at an unprecedented scale. They monitor and decide what content is allowed on their apps and websites, and they build algorithms to make those decisions. What’s worse, in Europe, a new copyright law adopted in March 2019 forces platforms to take on the power to surveil, censor and dictate how we express ourselves and communicate with each other online.

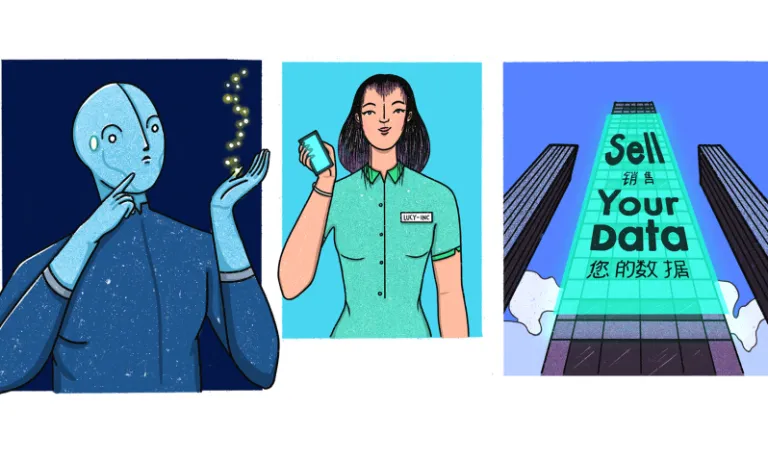

'Free' services

We don’t believe in 'free' services anymore – we know our data is being exploited. Abusive data practices are used to track, target and influence our behaviour and opinions. In 2018, Cambridge Analytica showed us how this practice undermines democratic processes and weakens democracies.

This is not to say that the solution to 'free' services is paying for privacy-enhanced products. Privacy should not be something you have to pay for. And companies offering 'free' services should not use them to mask data exploitation practices.

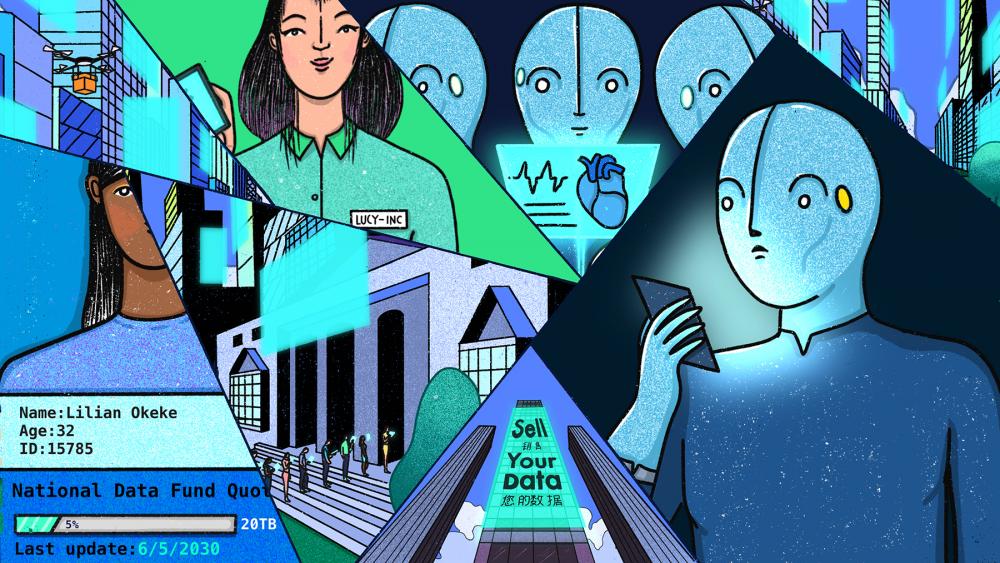

How? Here’s where our journey begins. Below are four possible future scenarios. They’re presented through the eyes of Amtis, a persona representing an everyday individual caught up in the digital challenges of the future.

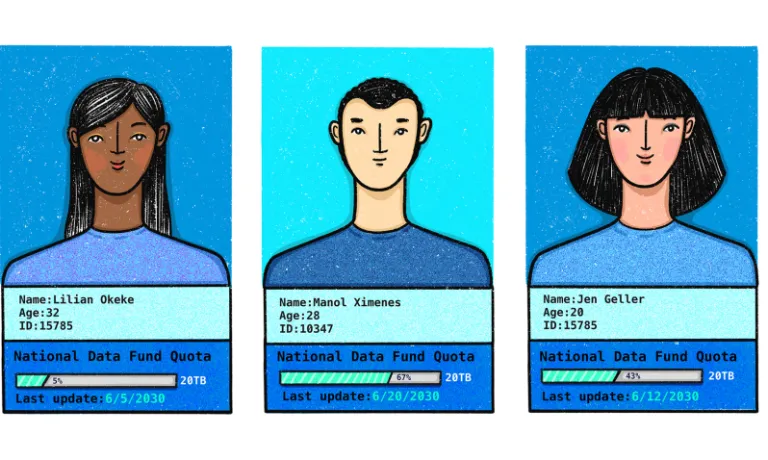

Amtis travelled forward in time and found four different futures: one where data is treated like property and data markets are created; one where people are paid for data as labour; one where data is stored in nationalised funds; and one where users have clear rights concerning their data.

Here’s what I collected from Amtis’ diary. I’ve added some of my own reflections at the end of each chapter as well, just to spice things up a bit :)

NEXT STEPS

How do you see YOUR future? Tell us your first step for shaping it.

Don’t know exactly what your first step should be? Here are some suggestions:

1. Elections around the world are coming up. Ask candidates what role they think data plays in the future. If you see a lack of foresight, engage in a deeper discussion with them. Make them listen and use the scenarios to explain how things might go wrong.

2. Start a data rights hub in your community. There are a few discussion forums that are dedicated to thinking about what happens next and how to address our data challenges. For example, check out the My Data community and the ResponsibleData.io mailing list where you can engage in conversations on the themes discussed in the scenarios.

3. Your creative skills are needed for spreading the data discussion more broadly. Reach out to us if you want to contribute to building a toolkit to make it easier for your friends, your neighbours and the world to engage with the topic. We would all benefit from sharing this topic with more communities!

If you want to engage with this work further by writing a comic book or a song, or by making an art exhibition, an animation or a short video, contact PI.

I want to give special thanks to everybody who helped me shape and improve this project: My Privacy International and Mozilla colleagues, my fellow fellows Maggie Haughey, Slammer Musuta, Darius Kazemi and many other friends and wonderful people: Andreea Belu, Alexandra Ștefănescu, Lucian Boca, Josh Woodard, Philip Sheldrake, Christopher Olk, Tuuka Lehtiniemi, and Renate Samson.

Illustrations by Cuántika Studio (Juliana López - Art Director and Illustrator, Sebastián Martínez - Creative Director).

Copyediting by Sam DiBella.

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

Suggested citation: Our Data Future, by Valentina Pavel, former Mozilla Fellow embedded at Privacy International, project and illustrations licensed under CC BY-SA 4.0.
