All we want for Christmas is... Clearview AI to be banned (and it looks like it's happening)!

Bit by bit, regulators across the world are chipping away at the toxic business model that Clearview AI relies on.

Updated on 16 August 2022

On 29 November 2021, the UK data protection authority (ICO) found "alleged serious breaches of the UK's data protection laws" by Clearview AI, and issued a provisional notice to stop further processing of the personal data of people in the UK and to delete it. It also announced its "provisional intent to impose a potential fine of just over £17 million" on Clearview AI. On 23 May 2022, the ICO issued its final decision, imposing a fine of over £7.5 million on the company, and ordering it to delete and stop processing data of UK residents.

On 16 December 2021, the French data protection authority (CNIL) found Clearview's data processing of French residents illegal and ordered it to cease processing and to delete the data within two months.

On 10 February 2022, the Italian data protection authority (Garante) found "several infringements by Clearview AI", fined the company €20 million, and ordered it to delete and stop processing data of Italian residents.

On 13 July 2022, the Greek data protection authority (Hellenic DPA) imposed a €20 million fine on Clearview AI, the highest fine it has ever imposed, and also required Clearview AI to delete and stop processing data of data subjects located in Greece.

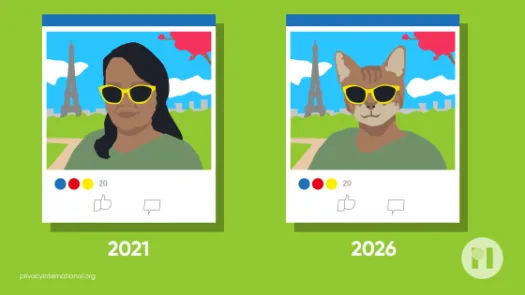

The notorious Clearview AI first rose to prominence in January 2020, following a New York Times report. Put simply, Clearview AI is a facial recognition company that uses an “automated image scraper”, a tool that searches the web and collects any images that it detects as containing human faces. All these faces are then run through its proprietary facial recognition software, to build a gigantic biometrics database.

What this means is that without your knowledge, your face could be stored indefinitely in Clearview AI’s face database, accessed by a wide variety of strangers, and linked to all kinds of other online information about you.

You might be wondering why they do this. The answer is simple: they make money by selling access to their huge database of faces to the police and even to private companies. In doing so, Clearview relies on a simple but corrosive premise: if you are out in public or post something online, then it's somehow fair game for them to exploit you and your data for their own advantage! That, in essence, is Clearview's argument. So, does this allow Clearview to ‘grab’ data that does not belong to the firm and use it as the firm sees fit, so that it can set up and profit from shady agreements with law enforcement? Nope, we don't think so.

And regulators didn't think so either. Data protection regulators in Canada, Sweden, Germany and Australia were among the first to condemn Clearview's data practices. Sadly, though, their decisions didn't seem to be enough to curb the ambitions of the company, or of any other company aspiring to be like Clearview (see the corporate opportunism the COVID-19 pandemic gave rise to).

That's why, back in May 2021, PI teamed up with three other organisations and filed complaints before several regulators in Europe. Specifically, PI filed complaints with the data protection authorities in the UK and France. Hermes Center for Transparency and Digital Human Rights, Homo Digitalis and noyb - the European Center for Digital Rights filed complaints before the Italian, Greek, and Austrian regulators, respectively.

In our complaints, we argued that Clearview's technology and its use further the very harms that European data protection legislation was designed to remedy. Our goal was to put pressure on regulators to take coordinated enforcement action to protect individuals from these highly invasive and dangerous practices.

We were delighted to see that our complaints either assisted regulators with investigations they had already opened into the company or triggered new ones. For example, the Greek data protection authority is currently investigating the complaint submitted by Homo Digitalis and, in a similar vein, the Austrian regulator has asked Clearview to provide clarifications and address the concerns contained in noyb's complaint.

In the UK, the Information Commissioner's Office (ICO) had already commenced a joint investigation with its Australian counterpart, and announced in November 2021 its provisional intent to issue a potential fine of £17 million against the company. In its announcement, the regulator found that Clearview AI appears to have failed to comply with UK data protection laws in several ways. It also issued a provisional notice to the company to stop further processing of the personal data of people in the UK and to delete it. The ICO’s provisional findings largely reflect the arguments we put forward in our submissions to it in May 2021.

But there's more! Just this week the French regulator (CNIL), before which we also made a detailed submission asking it to halt the company's exploitative practices, issued a decision ordering the company to stop collecting and processing the data of people in France!

Specifically, CNIL found that Clearview AI's practices present "a particularly strong intrusiveness" and that the company cannot escape European data protection laws. More importantly, the decision emphasises that the fact that individuals' photos are publicly accessible "is no general authorisation to re-use and further process publicly available personal data, particularly without the knowledge of the data subjects".

If there's one thing all these decisions make clear, it's this: the fact that our data are available on the Internet does not make them fair game for companies like Clearview to exploit for profit.

We hope that Clearview, along with every company aspiring to mimic it and every investor hoping to profit from it, will learn its lesson. And we hope that we can continue to be free from creeps who seek to profit from the memories we and our loved ones choose to share on the Internet.